Edge handling#

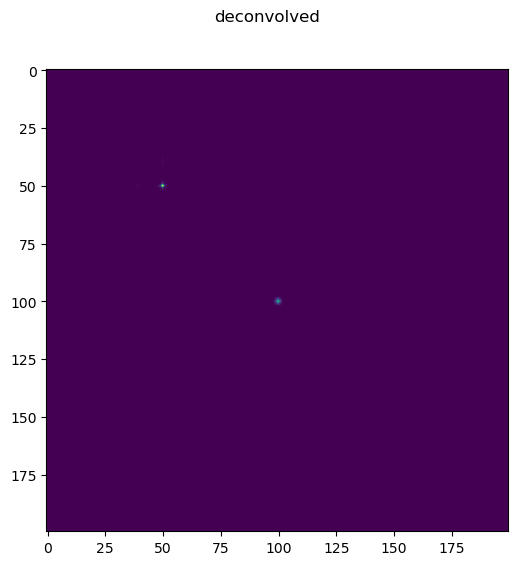

Edge handling is a very important aspect of deconvolution. If edges are not handled properly severe artifacts can occur when deconvolving. If edges are handled properly these artifacts are minimized, and in fact, as this example shows it may even be possible to reconstruct signal slightly beyond the acquired field of view (though keep in mind this example assumes no noise, so in real conditions it is harder to reconstruct signal outside the boundaries).

This example has been designed to work in a conda environment that has numpy, matplotlib, scipy and skimage, and shouldn’t need complicated dependencies.

Edge handling math background#

I had a great conversation with Andrew York recently about framing Richardson Lucy as a general tool that can be used to solve different types of problems by normalizing the RL equation by a factor \(H^T ones\) where \(H\) is the forward model of the system.

I have been doing something similar for edge handling since 2013, based on technical notes from the 2014 deconvolution grand challenge.

Below is a justificaton for a simple modification to the Richardson Lucy algorithm which can be used to handle edges and also reconstruct a small amount of structure outside the imaging window. In this case I assume no noise, let \(o_k\) be the object estimate at iteration \(k\), \(i\) be the observed image, \(h\) the point spread function. and \(n\) be an unknown normalization factor.

To avoid the convolutions wrapping we extend the image by zeros by a factor of psf_size/2+1 in each dimension. We can alternatively express this as a window function \(w\) times the image in a larger solution space.

Now let’s assume we have estimated the object \(o\) and PSF \(h\) perfectly, that assumption is never practically satisfied, but if it was we want the algorithm to stop updating. In that idealized case the term \(o_{k}*h=i^`\) will be \(i\) within the window (remember we are assuming no noise). And if we have a perfect estimate, we no longer want to change it, so the update term of the Richardson Lucy equation should be 1 everywhere.

Solving the above equation gives us an expression for the normalization factor \(n\) which will lead to an update term of all 1s when the estimate \(o_k\) equals the true solution in an ideal simulation. In practical cases I have found this normalization factor is needed for proper edge handling.

Define functions for PSF, forward model and RIchardson Lucy#

This example has been designed to work in a conda environment that has numpy, matplotlib and skimage, and doesn’t need complicated dependencies. To make this example relatively independent of the experimental modules, In the next few blocks we define functions for

A forward model -> Convolution + Poisson Noise

A paraxial PSF

2D Richardson Lucy (on the CPU) using Numpy

A 2d image plotting helper

The paraxial PSF function and forward model function were inspired by Matlab code by James Maton that can be found here

Define Richardson Lucy with an optional normalization factor#

Below we define a modified version of Richardson Lucy which takes in an optional normalization factor and a first guess.

import numpy as np

import math

from numpy.fft import rfftn, irfftn, fftshift, ifftshift, ifftn, fftn

from numpy.random import poisson

import matplotlib.pyplot as plt

def richardson_lucy_np(image, psf, num_iters, normal=None, first_guess=None):

image=image.astype('float64')

psf=psf.astype('float64')

delta = 0.00001

otf = rfftn(ifftshift(psf))

otf_ = np.conjugate(otf)

if (first_guess is None):

if (normal is not None):

estimate=normal

estimate=estimate*image.sum()/estimate.sum()

estimate[normal<0.01]=0

else:

estimate=image.copy()

else:

estimate=first_guess

for i in range(num_iters):

reblurred = irfftn(rfftn(estimate) * otf)

ratio = image / (reblurred + 1e-10)

estimate = estimate * (irfftn(rfftn(ratio) * otf_))

if normal is not None:

estimate=np.divide(estimate, normal, where=normal>delta)

estimate[normal<delta]=0

return estimate

Define forward, psf, pad and imshow functions#

Note in this example the forward model includes windowing.

def forward(field, psf, window=None):

'''

Note this function inspired by code from https://github.com/jdmanton/rl_positivity_sim by James Manton

'''

otf = fftn(ifftshift(psf))

field_imaged = ifftn(fftn(field)*otf)

field_imaged = field_imaged

if window!=None:

field_imaged=field_imaged[window]

return field_imaged

def paraxial_otf(n, wavelength, numerical_aperture, pixel_size):

'''

Note this function inspired by code from https://github.com/jdmanton/rl_positivity_sim by James Manton

'''

nx, ny=(n,n)

resolution = 0.5 * wavelength / numerical_aperture

image_centre_x = n / 2 + 1

image_centre_y = n / 2 + 1

x=np.linspace(0,nx-1,nx)

y=np.linspace(0,ny-1,ny)

x=x-image_centre_x

y=y-image_centre_y

X, Y = np.meshgrid(x,y)

filter_radius = 2 * pixel_size / resolution

r = np.sqrt(X*X+Y*Y)

r=r/x.max()

v=r/filter_radius

v = v * (r<=filter_radius)

otf = 2 / np.pi * (np.arccos(v) - v * np.sqrt(1 - v*v))*(r<=filter_radius);

return otf

def paraxial_psf(n, wavelength, numerical_aperture, pixel_size):

otf = paraxial_otf(n, wavelength, numerical_aperture, pixel_size)

psf = fftshift(ifftn(ifftshift(otf)).astype(np.float32))

return psf/psf.sum()

def imshow2d(im, width=8, height=6):

fig, ax = plt.subplots(figsize=(width,height))

ax.imshow(im)

return fig

def pad(img, paddedsize, mode):

""" pad image to paddedsize

Args:

img ([type]): image to pad

paddedsize ([type]): size to pad to

mode ([type]): one of the np.pad modes

Returns:

[nd array]: padded image

"""

padding = tuple(map(lambda i,j: ( math.ceil((i-j)/2), math.floor((i-j)/2) ),paddedsize,img.shape))

return np.pad(img, padding,mode)

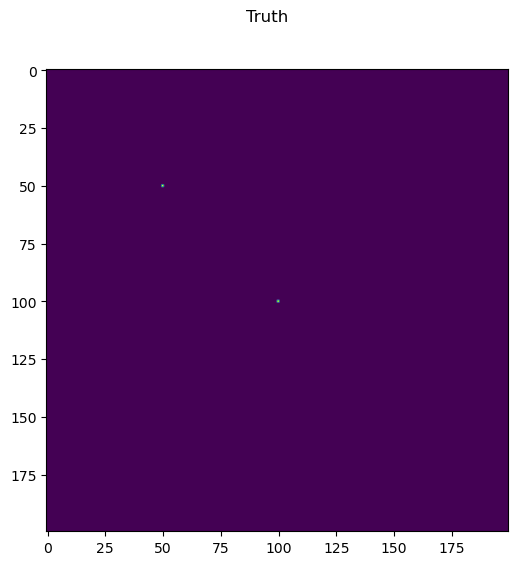

Create ground truth#

Below we have 2 options, the first is a ground truth with 2 points, the second is the star resolution test. Feel free to experiment with additional types of ground truth.

truth_type =0

if truth_type == 0:

n=200

truth=np.zeros([n,n],dtype='float32')

truth[n//4,n//4]=1000

truth[n//2,n//2]=1000

if truth_type == 1:

from skimage.io import imread

im_path='D:/images/i2k2022/deconvolution/'

truth_name='star.tif'

truth=imread(im_path+truth_name)

truth=truth.astype('float32')

n=truth.shape[0]

fig=imshow2d(truth)

fig.suptitle('Truth')

Text(0.5, 0.98, 'Truth')

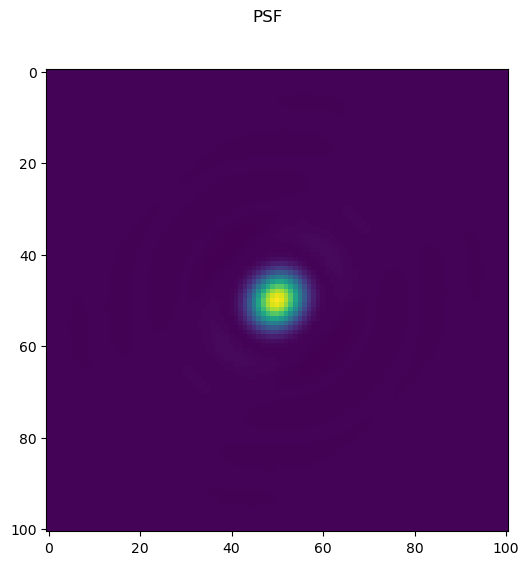

Create the PSF#

m=101

wavelength = 500

na=1.4

pixel_size = 20

psf=paraxial_psf(m, wavelength, na, pixel_size)

psf=psf-psf.min()

psf=psf/psf.sum()

print(psf.min(), psf.max())

fig=imshow2d(psf)

fig.suptitle('PSF')

0.0 0.0060154707

/tmp/ipykernel_28548/2834657628.py:44: ComplexWarning: Casting complex values to real discards the imaginary part

psf = fftshift(ifftn(ifftshift(otf)).astype(np.float32))

Text(0.5, 0.98, 'PSF')

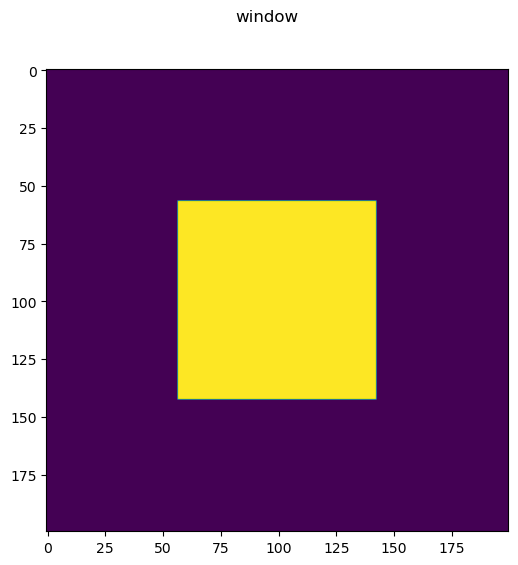

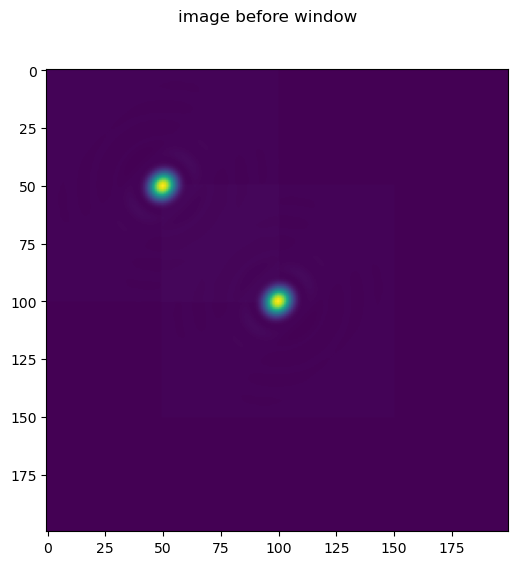

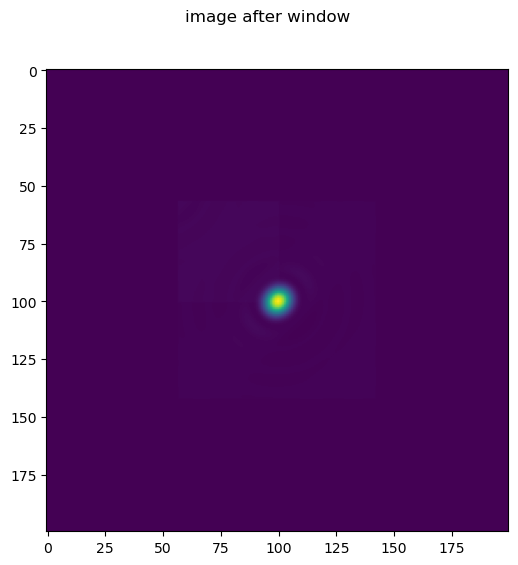

Create the image#

Note in this case we apply a window to the image, thus in the final image we only clearly see one emitter.

psf=pad(psf, truth.shape, 'constant')

ws=7

window_index=np.s_[n//4+ws:3*n//4-ws,n//4+ws:3*n//4-ws]

window=np.zeros_like(truth)

window[window_index]=1

fig = imshow2d(window)

fig.suptitle('window')

im = forward(truth, psf).astype('float32')

fig = imshow2d(im)

fig.suptitle('image before window')

im=im*window

#im = pad(im, truth.shape, 'constant')

fig = imshow2d(im)

fig.suptitle('image after window')

/tmp/ipykernel_28548/4177033410.py:10: ComplexWarning: Casting complex values to real discards the imaginary part

im = forward(truth, psf).astype('float32')

Text(0.5, 0.98, 'image after window')

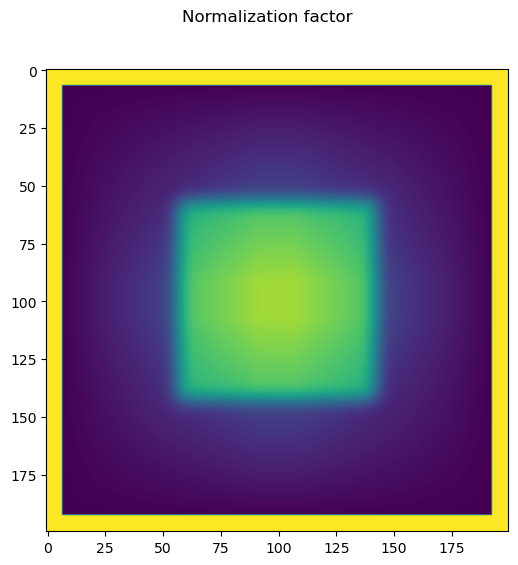

Create the normalization factor#

flat=np.ones_like(im)

flat=flat[window_index]

flat=pad(flat,truth.shape,'constant')

otf = fftn(ifftshift(psf))

otf_ = np.conjugate(otf)

normal=(ifftn(fftn(flat) * otf_)).astype(float)

delta=.00001

normal[normal<delta]=1

fig = imshow2d(normal)

fig.suptitle('Normalization factor')

/tmp/ipykernel_28548/3135814947.py:7: ComplexWarning: Casting complex values to real discards the imaginary part

normal=(ifftn(fftn(flat) * otf_)).astype(float)

Text(0.5, 0.98, 'Normalization factor')

Deconvolve#

We call richardson lucy passing the normalization factor

decon=richardson_lucy_np(im, psf, 500, normal)

fig = imshow2d(decon)

fig.suptitle('deconvolved')

Text(0.5, 0.98, 'deconvolved')

Inspect result in Napari#

import napari

viewer = napari.Viewer()

viewer.add_image(decon)

viewer.add_image(im)

<Image layer 'im' at 0x7f00fdc11e50>

Discussion

After playing with the contrast of the images in Napari do you notice anything strange?

What could be done to make this example better?